Azure Cognitive Services: Speech Services

Author: Ronald Fung

Creation Date: 31 May 2023

Next Modified Date: 31 May 2024

A. Introduction

The Speech service provides speech to text and text to speech capabilities with an Azure Speech resource. You can transcribe speech to text with high accuracy, produce natural-sounding text to speech voices, translate spoken audio, and use speaker recognition during conversations.

Create custom voices, add specific words to your base vocabulary, or build your own models. Run Speech anywhere, in the cloud or at the edge in containers. It’s easy to speech-enable your applications, tools, and devices with the Speech CLI, Speech SDK, Speech Studio, or REST APIs.

Speech is available for many languages, regions, and price points.

Speech scenarios

Common scenarios for speech include:

Captioning: Learn how to synchronize captions with your input audio, apply profanity filters, get partial results, apply customizations, and identify spoken languages for multilingual scenarios.

Audio Content Creation: You can use neural voices to make interactions with chatbots and voice assistants more natural and engaging, convert digital texts such as e-books into audiobooks, and enhance in-car navigation systems.

Call Center: Transcribe calls in real time or process a batch of calls, redact personally identifying information, and extract insights such as sentiment to help with your call center use case.

Language learning: Provide pronunciation assessment feedback to language learners, support real-time transcription for remote learning conversations, and read aloud teaching materials with neural voices.

Voice assistants: Create natural, humanlike conversational interfaces for your applications and experiences. The voice assistant feature provides fast, reliable interaction between a device and an assistant implementation.

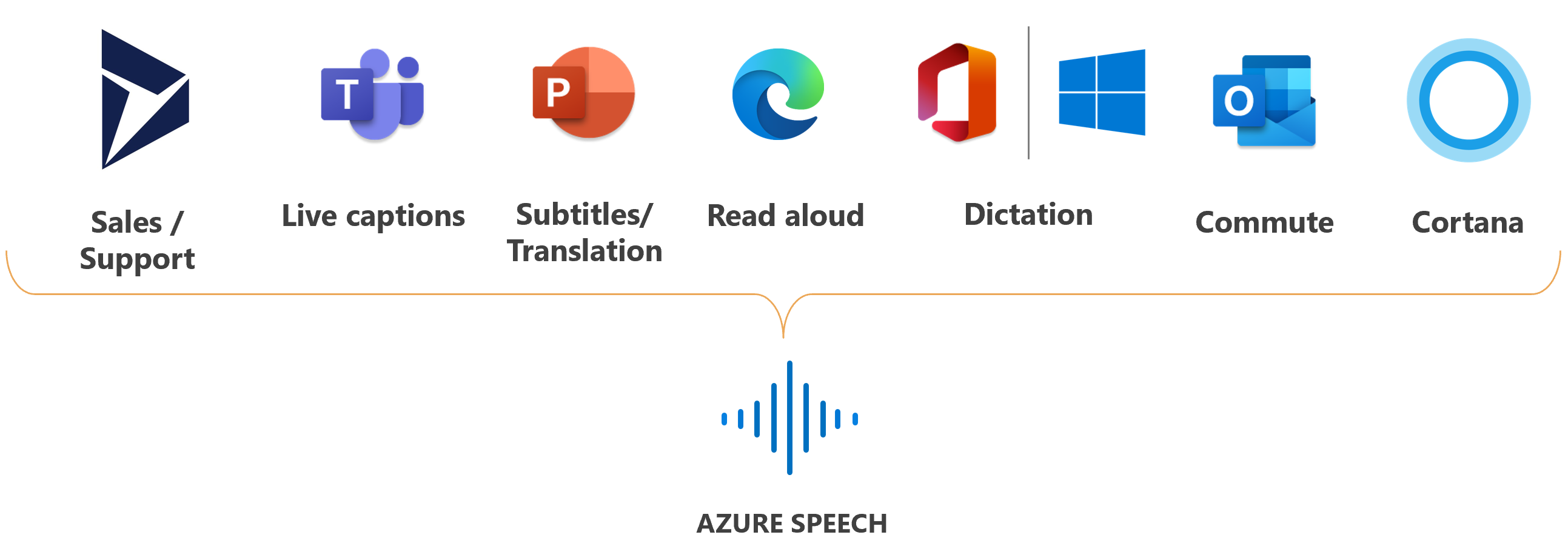

Microsoft uses Speech for many scenarios, such as captioning in Teams, dictation in Office 365, and Read Aloud in the Edge browser.

B. How is it used at Seagen

As a biopharma research company that uses Microsoft Azure, you can use Azure Cognitive Services: Speech Services to build speech-enabled applications that improve patient engagement and adherence. Possible uses include:

Automated voice assistants: You can use Azure Cognitive Services: Speech Services to build automated voice assistants that can interact with patients and provide information about drugs, clinical trials, and other topics.

Patient reminders: You can use Azure Cognitive Services: Speech Services to build applications that can remind patients to take their medications or attend appointments using natural language.

Voice authentication: You can use Azure Cognitive Services: Speech Services to build applications that use voice authentication to verify the identity of patients, ensuring that sensitive information is protected.

Multilingual support: You can use Azure Cognitive Services: Speech Services to build applications that can understand and respond to speech in multiple languages, allowing you to reach patients around the world.

Real-time transcription: You can use Azure Cognitive Services: Speech Services to build applications that provide real-time transcription of conversations between patients and healthcare providers, enabling better communication and collaboration.

Overall, Azure Cognitive Services: Speech Services is a flexible tool for building speech-enabled applications that can improve patient engagement and adherence. Its customization options let you tailor those applications to the needs of your research or business.
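As an illustration of the patient reminder scenario above, text-to-speech input can be supplied as SSML (Speech Synthesis Markup Language). The sketch below builds such a document using only the Python standard library. The function name `build_reminder_ssml`, the reminder wording, and the voice `en-US-JennyNeural` are illustrative assumptions, not a description of any existing Seagen application, and the code does not call the service.

```python
from xml.sax.saxutils import escape, quoteattr


def build_reminder_ssml(message: str,
                        voice: str = "en-US-JennyNeural",  # assumed voice name
                        lang: str = "en-US") -> str:
    """Wrap a plain-text reminder in a minimal SSML document."""
    return (
        '<speak version="1.0" '
        'xmlns="http://www.w3.org/2001/10/synthesis" '
        f'xml:lang={quoteattr(lang)}>'
        # escape() protects characters such as & and < in the message text.
        f'<voice name={quoteattr(voice)}>{escape(message)}</voice>'
        '</speak>'
    )


# Hypothetical reminder text, used only to show the escaping.
ssml = build_reminder_ssml("Time to take your 8 AM dose & log it.")
print(ssml)
```

The resulting string is what would be passed to a text-to-speech call; escaping the message matters because reminder text may contain characters that are not valid in XML.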

C. Features

Azure Cognitive Services: Speech Services is a machine learning-based service that enables you to add speech-enabled capabilities to your applications. Here are some of the key features of Azure Cognitive Services: Speech Services:

Speech-to-text: Azure Cognitive Services: Speech Services can convert spoken language into written text, which can be used for transcription, translation, or other applications.

Text-to-speech: Azure Cognitive Services: Speech Services can convert written text into spoken language, which can be used for automated voice assistants, patient reminders, and other applications.

Speaker recognition: Azure Cognitive Services: Speech Services can identify and authenticate individual speakers, which can be used for voice authentication and other applications.

Customization: Azure Cognitive Services: Speech Services allows you to customize the speech models to meet your specific needs, such as by adding custom words or phrases, or training the models on your specific data.

Multilingual support: Azure Cognitive Services: Speech Services provides support for multiple languages, which allows you to build speech-enabled applications that can be used by patients or customers around the world.

Real-time transcription: Azure Cognitive Services: Speech Services can provide real-time transcription of conversations, which can be used for communication and collaboration between patients and healthcare providers.

Cost-effective: Azure Cognitive Services: Speech Services is a cost-effective solution for adding speech-enabled capabilities to your applications, as it is available as a pay-as-you-go service and can be scaled up or down as needed.

Overall, Azure Cognitive Services: Speech Services is a flexible way to add speech capabilities to your applications. Speech-to-text, text-to-speech, and speaker recognition can be combined and customized to suit the needs of your research or business.
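To make the speech-to-text feature more concrete, the sketch below prepares a request for the speech-to-text REST API for short audio, using only the Python standard library. It builds the request but does not send it. The helper name `build_stt_request`, the region `westus2`, and the key placeholder are assumptions for illustration; substitute the region and key of your own Speech resource.

```python
import urllib.parse
import urllib.request


def build_stt_request(region: str, key: str, wav_bytes: bytes,
                      language: str = "en-US") -> urllib.request.Request:
    """Prepare (but do not send) a short-audio speech-to-text request."""
    query = urllib.parse.urlencode({"language": language, "format": "detailed"})
    url = (f"https://{region}.stt.speech.microsoft.com"
           f"/speech/recognition/conversation/cognitiveservices/v1?{query}")
    headers = {
        # Key of the Speech resource; never hard-code a real key.
        "Ocp-Apim-Subscription-Key": key,
        # Assumes 16 kHz PCM audio in a WAV container.
        "Content-Type": "audio/wav; codecs=audio/pcm; samplerate=16000",
        "Accept": "application/json",
    }
    return urllib.request.Request(url, data=wav_bytes, headers=headers,
                                  method="POST")


# Placeholder region, key, and empty audio: for illustration only.
request = build_stt_request("westus2", "<your-speech-key>", b"")
print(request.full_url)
```

Sending the request with `urllib.request.urlopen(request)` requires a valid key and real audio. For longer recordings or streaming, the Speech SDK is usually the better fit than this REST call.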

D. Where Implemented

E. How it is tested

Testing Azure Cognitive Services: Speech Services involves verifying that the service is accurately recognizing and synthesizing speech, and providing appropriate responses to users. Here are some steps you can take to test Azure Cognitive Services: Speech Services:

Verify configuration: Verify that Azure Cognitive Services: Speech Services is properly configured and integrated with your Azure account and resources.

Test speech recognition: Test Azure Cognitive Services: Speech Services by speaking sample phrases and verifying that the service accurately recognizes and transcribes them.

Test text-to-speech: Test Azure Cognitive Services: Speech Services by providing sample text and verifying that the service accurately synthesizes it into natural-sounding speech.

Test speaker recognition: Test the speaker recognition capabilities of Azure Cognitive Services: Speech Services by verifying that the service accurately identifies individual speakers.

Test customization: Test the customization capabilities of Azure Cognitive Services: Speech Services by configuring the speech models to meet your specific needs, and verifying that the service accurately recognizes and synthesizes speech based on your unique requirements.

Test multilingual support: Test the multilingual support capabilities of Azure Cognitive Services: Speech Services by speaking and synthesizing speech in multiple languages, and verifying that the service accurately recognizes and synthesizes speech in those languages.

Test real-time transcription: Test the real-time transcription capabilities of Azure Cognitive Services: Speech Services by having conversations and verifying that the service accurately transcribes them in real time.

Test cost-effectiveness: Monitor usage and billing for Azure Cognitive Services: Speech Services, and compare actual spend against the budget expected for your workload.

Overall, testing Azure Cognitive Services: Speech Services means verifying that the service is properly configured, then checking speech recognition and synthesis, speaker recognition, customization, multilingual support, real-time transcription, and cost against your requirements.
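One common way to quantify the "accurately recognizes and transcribes" checks above is word error rate (WER): the number of word substitutions, insertions, and deletions needed to turn the transcript into the reference, divided by the number of reference words. The following is a minimal sketch, not part of any Azure SDK. The function name and the sample sentences are hypothetical, and normalization is limited to lowercasing, so punctuation should be stripped beforehand in a real evaluation.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the reference length."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")

    # prev[j] is the edit distance between the reference words processed
    # so far (initially none) and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(prev[j] + 1,         # reference word deleted
                          curr[j - 1] + 1,     # extra word inserted
                          prev[j - 1] + cost)  # match or substitution
        prev = curr
    return prev[-1] / len(ref)


# Hypothetical reference phrase and service transcript.
wer = word_error_rate("take one tablet twice daily",
                      "take one tablet twice a day")
print(f"WER: {wer:.2f}")
```

Tracking WER over a fixed set of recorded phrases, including accented speech and noisy audio, gives a repeatable baseline for comparing base and customized models.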

F. 2023 Roadmap

????

G. 2024 Roadmap

????

H. Known Issues

As with any software or service, there may be known issues or limitations that users should be aware of when using Azure Cognitive Services: Speech Services. Here are some of the known issues for Azure Cognitive Services: Speech Services:

Limited accuracy: While Azure Cognitive Services: Speech Services provides accurate results in many cases, it may not always accurately recognize or synthesize speech, particularly in cases where the speech is heavily accented or contains background noise.

Limited customization: Azure Cognitive Services: Speech Services has limited customization options, which can limit the ability of users to configure the service to their specific needs.

Limited training data: Custom models in Azure Cognitive Services: Speech Services need a large amount of high-quality training data to recognize or synthesize speech accurately. This data can be difficult to obtain and may require significant time and resources.

Limited integration: Azure Cognitive Services: Speech Services has limited integration with third-party tools and services, which can limit the ability of users to incorporate it into their existing workflows.

Cost: Azure Cognitive Services: Speech Services can be expensive for users with limited budgets, particularly if they use it frequently or for large volumes of data.

Overall, while Azure Cognitive Services: Speech Services offers a powerful and flexible tool for adding speech-enabled capabilities to your applications, users must be aware of these known issues and take steps to mitigate their impact. Mitigations include configuring the service for the specific data involved, monitoring recognition and synthesis accuracy over time, and planning how the service will integrate with existing workflows before committing to it.
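For the cost concern above, a rough estimate before committing to a workload can help. The sketch below assumes billing proportional to hours of audio processed. The function name, the rate, and the free allowance are placeholders rather than actual Azure prices, so take real values from the current Azure pricing page.

```python
def estimate_monthly_cost(audio_hours: float, rate_per_hour: float,
                          free_hours: float = 0.0) -> float:
    """Estimate spend for a month of audio, after any free allowance."""
    billable_hours = max(audio_hours - free_hours, 0.0)
    return round(billable_hours * rate_per_hour, 2)


# Placeholder figures: 120 audio hours, 1.00 per hour, 5 free hours.
estimate = estimate_monthly_cost(audio_hours=120, rate_per_hour=1.0,
                                 free_hours=5)
print(f"Estimated monthly cost: {estimate:.2f}")
```

Comparing this estimate with the billed amount each month is a simple way to notice unexpected usage early.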

[x] Reviewed by Enterprise Architecture

[x] Reviewed by Application Development

[x] Reviewed by Data Architecture