Azure Data Explorer

Author: Ronald Fung

Creation Date: 15 May 2023

Next Modified Date: 15 May 2024

A. Introduction

Data ingestion is the process used to load data records from one or more sources into a table in Azure Data Explorer. Once ingested, the data becomes available for query.

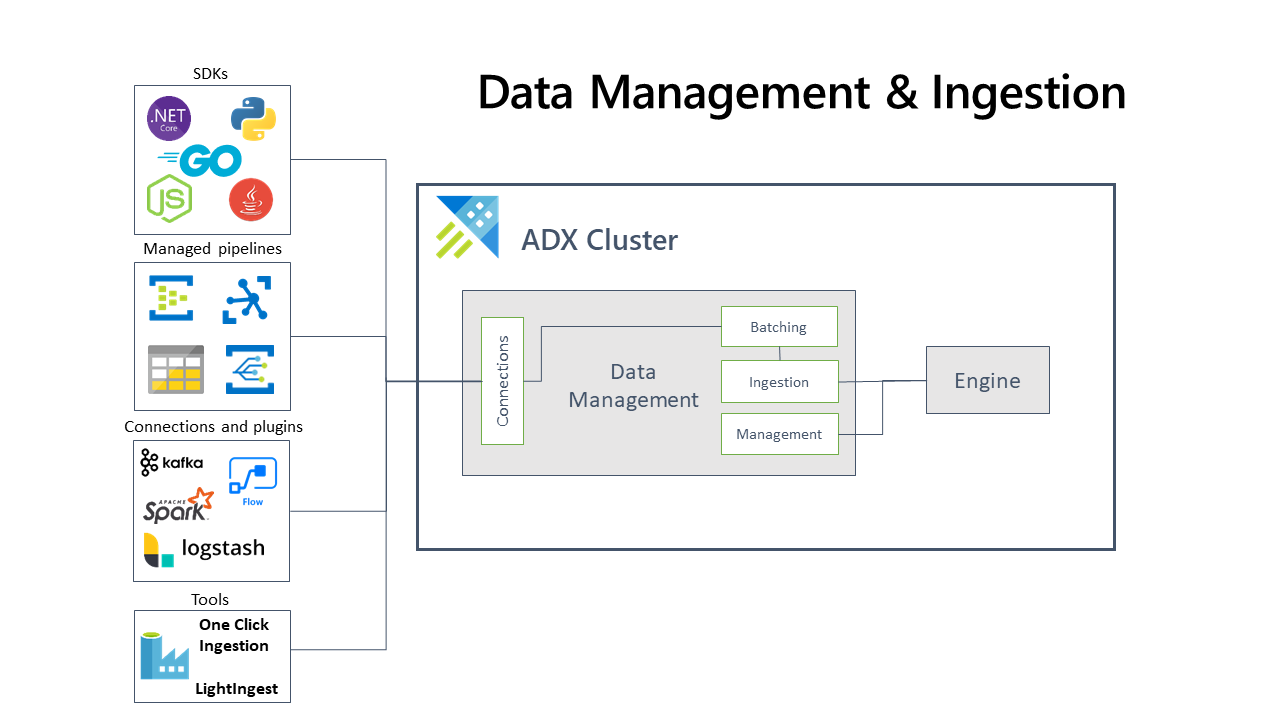

The diagram above shows the end-to-end flow for working in Azure Data Explorer and the different ingestion methods.

The Azure Data Explorer data management service, which is responsible for data ingestion, implements the following process:

1. Azure Data Explorer pulls data from an external source and reads requests from a pending Azure queue.
2. Data is batched or streamed to the Data Manager. Batch data flowing to the same database and table is optimized for ingestion throughput.
3. Azure Data Explorer validates initial data and converts data formats where necessary.
4. Further data manipulation includes matching schema, organizing, indexing, encoding, and compressing the data.
5. Data is persisted in storage according to the set retention policy.
6. The Data Manager then commits the ingested data to the engine, where it becomes available for query.

B. How it is used at Seagen

For a biopharma research company such as Seagen, Azure Data Explorer can help quickly analyze large amounts of data generated by sources such as clinical trials, experiments, and other research activities. Here are some ways it can be used:

Collect and ingest data: Azure Data Explorer can ingest large amounts of data from sources such as IoT devices, sensors, or other applications in near real time and store it for further analysis.

Analyze data in real time: Queries written in Kusto Query Language (KQL) can analyze data shortly after it is ingested into Azure Data Explorer. This can help you detect anomalies, identify trends, or trigger other actions based on the data.

Store and archive data: Ingested data is kept in Azure Data Explorer according to the database or table retention policy. For long-term archiving, data can be exported to Azure Blob Storage or Azure Data Lake Storage, for example through continuous export to an external table, and still be queried through external tables.

Integrate with other systems: Azure Data Explorer can be integrated with other systems and applications used in the research process. For example, Azure Logic Apps or Azure Functions can trigger actions or exchange data between systems.

Secure and scalable data processing: Azure Data Explorer can process sensitive data securely and at scale. Azure Active Directory can be used to manage access to Azure Data Explorer, and the cluster's optimized autoscale setting can scale the cluster out or in automatically based on the workload.

C. Features

Supported data formats, properties, and permissions

Supported data formats: The data formats that Azure Data Explorer can understand and ingest natively (for example, Parquet and JSON).

Ingestion properties: The properties that affect how the data is ingested (for example, tagging, mapping, and creation time).

Permissions: Ingesting data requires database ingestor-level permissions. Other actions, such as querying, may require database admin, database user, or table admin permissions.
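
To illustrate the ingestion properties above, here is a sketch of a Python helper that composes a KQL `.ingest into` command with the `format`, `ingestionMappingReference`, `creationTime`, and `tags` properties. The helper name, table, blob URI, mapping name, and tag values are hypothetical. Microsoft documents `.ingest into` as direct ingestion intended mainly for exploration and prototyping rather than high-volume production workloads, and running it requires ingestion permissions like those described above.

```python
import json
from typing import Optional, Sequence


def build_ingest_command(table: str, blob_uri: str, data_format: str,
                         mapping_reference: Optional[str] = None,
                         creation_time: Optional[str] = None,
                         tags: Optional[Sequence[str]] = None) -> str:
    """Build a KQL `.ingest into` command carrying common ingestion properties.

    Values are not escaped, so only trusted input should be passed in.
    """
    props = [f"format='{data_format}'"]
    if mapping_reference:
        # Name of an ingestion mapping already created on the table.
        props.append(f"ingestionMappingReference='{mapping_reference}'")
    if creation_time:
        # Sets the creation time recorded for the ingested data extents.
        props.append(f"creationTime='{creation_time}'")
    if tags:
        # Tags are passed as a JSON array inside a string literal.
        props.append(f"tags='{json.dumps(list(tags))}'")
    return (
        f".ingest into table {table} (\n"
        f"    h'{blob_uri}'\n"  # h'' hides the URI (and any secret) from logs
        f")\n"
        f"with ({', '.join(props)})"
    )


if __name__ == "__main__":
    print(build_ingest_command(
        "LabResults", "https://example.blob.core.windows.net/raw/results.json",
        "json", mapping_reference="LabResultsMapping",
        creation_time="2023-05-15", tags=["trial-a"]))
```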

Batching vs streaming ingestion

Batching ingestion groups incoming data into batches and is optimized for high ingestion throughput. It is the preferred and most performant ingestion method. Data is batched according to ingestion properties, and small batches are then merged and optimized for fast query results. The ingestion batching policy can be set on databases or tables. By default, a batch is sealed when the first of these limits is reached: 5 minutes, 1000 items, or a total size of 1 GB. The data size limit for a batch ingestion command is 6 GB.
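
The batching limits above can be sketched as follows, assuming the default values stated in this section (5 minutes, 1000 items, and 1 GB expressed as 1024 MB). The `BatchingPolicy` class and its `should_seal` method are hypothetical and only model the rule that a batch is sealed when the first limit is reached; `to_alter_command` renders the same values as a `.alter table ... policy ingestionbatching` command, with the table name as a placeholder.

```python
import json
from dataclasses import dataclass
from datetime import timedelta


@dataclass(frozen=True)
class BatchingPolicy:
    """Hypothetical model of the batching limits; defaults follow this section."""
    max_batching_time: timedelta = timedelta(minutes=5)
    max_items: int = 1000
    max_raw_size_mb: int = 1024  # 1 GB, as stated above

    def should_seal(self, batch_age: timedelta, item_count: int,
                    raw_size_mb: float) -> bool:
        """A batch is sealed as soon as the first of the three limits is reached."""
        return (batch_age >= self.max_batching_time
                or item_count >= self.max_items
                or raw_size_mb >= self.max_raw_size_mb)

    def to_alter_command(self, table: str) -> str:
        """Render the limits as an ingestion batching policy command for `table`."""
        total = int(self.max_batching_time.total_seconds())
        hours, rem = divmod(total, 3600)
        minutes, seconds = divmod(rem, 60)
        policy = {
            "MaximumBatchingTimeSpan": f"{hours:02d}:{minutes:02d}:{seconds:02d}",
            "MaximumNumberOfItems": self.max_items,
            "MaximumRawDataSizeMB": self.max_raw_size_mb,
        }
        return f".alter table {table} policy ingestionbatching @'{json.dumps(policy)}'"


if __name__ == "__main__":
    print(BatchingPolicy().to_alter_command("LabResults"))
```

For example, `BatchingPolicy(max_batching_time=timedelta(seconds=30)).to_alter_command("LabResults")` would produce a command with a 30-second batching window, trading some throughput for lower latency.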

Streaming ingestion is ongoing data ingestion from a streaming source. It provides near real-time latency for small sets of data per table. Data is initially ingested to row store and then moved to column store extents. Streaming ingestion can be done using an Azure Data Explorer client library or one of the supported data pipelines.
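
A minimal sketch of choosing between the two methods, assuming low latency is the deciding requirement and assuming a 4 MB per-request limit for streaming ingestion (confirm this against current Azure Data Explorer limits). The function names are hypothetical. Streaming ingestion also has to be enabled on the cluster and a streaming ingestion policy defined on the table or database; `enable_streaming_command` builds the table-level command.

```python
# Assumed per-request size limit for streaming ingestion; verify before relying on it.
STREAMING_REQUEST_LIMIT_BYTES = 4 * 1024 * 1024


def choose_ingestion_mode(payload_bytes: int, needs_low_latency: bool,
                          streaming_limit_bytes: int = STREAMING_REQUEST_LIMIT_BYTES) -> str:
    """Pick streaming only for small, latency-sensitive payloads; otherwise batch."""
    if needs_low_latency and payload_bytes <= streaming_limit_bytes:
        return "streaming"
    # Batching is the preferred, higher-throughput default.
    return "batching"


def enable_streaming_command(table: str) -> str:
    """KQL management command that enables the streaming ingestion policy on a table."""
    return f".alter table {table} policy streamingingestion enable"


if __name__ == "__main__":
    print(choose_ingestion_mode(10_000, needs_low_latency=True))
    print(enable_streaming_command("DeviceTelemetry"))
```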

Ingestion methods and tools

Azure Data Explorer supports several ingestion methods, each with its own target scenarios. These methods include ingestion tools, connectors and plugins to diverse services, managed pipelines, programmatic ingestion using SDKs, and direct access to ingestion.

For a list of data connectors, see Data connectors overview.

D. Where Implemented

E. How it is tested

Testing Azure Data Explorer involves ensuring that the service functions correctly and securely and meets the needs of all stakeholders involved in the project. Here are some steps to follow to test Azure Data Explorer:

Define the scope and requirements: Define the scope of the project and the requirements of all stakeholders involved in the project. This will help ensure that Azure Data Explorer is designed to meet the needs of all stakeholders.

Develop test cases: Develop test cases that cover all aspects of Azure Data Explorer functionality in use, including database creation, data ingestion, querying, and management. The test cases should also cover organizational requirements such as scalability and resilience.

Conduct unit testing: Test the individual components of Azure Data Explorer to ensure that they are functioning correctly. This may involve using tools like PowerShell or Azure CLI for automated testing.

Conduct integration testing: Test Azure Data Explorer in an integrated environment to ensure that it works correctly with other systems and applications. This may involve testing Azure Data Explorer with different operating systems, browsers, and devices.

Conduct user acceptance testing: Test Azure Data Explorer with end-users to ensure that it meets their needs and is easy to use. This may involve conducting surveys, interviews, or focus groups to gather feedback from users.

Automate testing: Automate the test cases so that they run repeatably, for example on every change. This may involve using tools like Azure DevOps to set up automated testing pipelines.

Monitor performance: Monitor the performance of Azure Data Explorer in production to ensure that it is meeting the needs of all stakeholders. This may involve setting up monitoring tools, such as Azure Monitor, to track usage and identify performance issues.

Address issues: Address any issues that are identified during testing and make necessary changes to ensure that Azure Data Explorer is functioning correctly and meeting the needs of all stakeholders.

By following these steps, you can ensure that Azure Data Explorer is tested thoroughly and meets the needs of all stakeholders involved in the project. This can help improve the quality of Azure Data Explorer and ensure that it functions correctly in a production environment.
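
The test case development and automation steps above can be sketched as a small smoke test. The `QueryRunner` type, the check functions, and the table names are hypothetical; the runner is passed in so that the same checks can use a stub in unit tests and a real Kusto client in an automated pipeline.

```python
from typing import Any, Callable, Dict, List

# A query runner takes a KQL query string and returns result rows as dicts.
# In a pipeline this would wrap a real client; in unit tests it can be a stub.
QueryRunner = Callable[[str], List[Dict[str, Any]]]


def check_min_row_count(run_query: QueryRunner, table: str, min_rows: int) -> bool:
    """Return True if `table` holds at least `min_rows` rows."""
    rows = run_query(f"{table} | count")
    # The KQL count operator returns one row with a single column named Count.
    return bool(rows) and rows[0]["Count"] >= min_rows


def run_smoke_tests(run_query: QueryRunner, expectations: Dict[str, int]) -> Dict[str, bool]:
    """Run the row-count check for each table and report pass/fail per table."""
    return {table: check_min_row_count(run_query, table, n)
            for table, n in expectations.items()}


if __name__ == "__main__":
    # Stub runner with canned results, so the checks can run without a cluster.
    canned = {"LabResults | count": [{"Count": 1200}],
              "DeviceTelemetry | count": [{"Count": 0}]}
    results = run_smoke_tests(lambda q: canned.get(q, []),
                              {"LabResults": 1000, "DeviceTelemetry": 1})
    print(results)  # {'LabResults': True, 'DeviceTelemetry': False}
```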

F. 2023 Roadmap

????

G. 2024 Roadmap

????

H. Known Issues

There are several known issues that can impact Azure Data Explorer. Here are some of the most common issues to be aware of:

Query performance issues: Queries that are not optimized, for example queries that filter late or scan more data or columns than needed, can run slowly. Following KQL best practices, such as applying time filters and other where clauses early in the query, helps keep query times low.

Partitioning issues: Partitioning issues can arise when the partition key in a table's data partitioning policy is not chosen carefully. The chosen key should distribute data evenly and suit the anticipated query workload.

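Data accuracy issues: Query results can be stale when there is latency between when data is generated and when it becomes available for query, for example up to the batching time span when batching ingestion is used. Choose ingestion methods and batching settings that match how current the data needs to be.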

Security issues: Security is a critical concern when it comes to Azure Data Explorer. It is important to ensure that Azure Data Explorer is secured and that access to the solution is restricted to authorized personnel.

Integration issues: Integration issues can arise when Azure Data Explorer is connected to other systems and applications, for example through mismatched data formats or schemas. Validate each integration before relying on it in production.

Compatibility issues: Azure Data Explorer may not be compatible with all platforms, devices, or languages. It is important to ensure that Azure Data Explorer is compatible with the organization’s existing infrastructure before implementation.

Testing issues: Gaps in test coverage can let problems reach production unnoticed. Make sure testing is thorough and covers all Azure Data Explorer functionality in use.

Overall, Azure Data Explorer requires careful planning and management to ensure that it is functioning correctly and meeting the needs of all stakeholders involved in the project. By being aware of these known issues and taking steps to address them, you can improve the quality of Azure Data Explorer and ensure the success of your project.

[x] Reviewed by Enterprise Architecture

[x] Reviewed by Application Development

[x] Reviewed by Data Architecture